bert的基础知识

Bert模型简要介绍 ————— Bert是一个基于深度transformer编码器结构的模型。利用预训练+微调的方式去解决其他的任务,区别于之前多数基于于特征提取的方法(如Elmo、Word2vec)。预训练任务包含两个目标,分别为掩码语言模型以及预测下一个句子的目标。 在下面的文章中,我们会从模型的基础结构和预训练任务开始,逐步深入到Bert的细节,最后再介绍一些Bert的应用。

Bert中的Embeddings

####Bert中的Embeddings包含哪些部分 Bert输入Embeddings包含三个部分,第一部分为word的embeddings,第二部分为position的embeddings,第三部分为token_type的embeddings,具体的代码如下(为了不使得代码冗长,删除了部分不重要的代码)

class TFBertEmbeddings(tf.keras.layers.Layer):

"""从token、token类型以及位置构建embeddings向量"""

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

self.config = config

self.hidden_size = config.hidden_size

self.max_position_embeddings = config.max_position_embeddings

self.initializer_range = config.initializer_range

self.LayerNorm = tf.keras.layers.LayerNormalization(epsilon=config.layer_norm_eps, name="LayerNorm")

self.dropout = tf.keras.layers.Dropout(rate=config.hidden_dropout_prob)

def build(self, input_shape: tf.TensorShape):

with tf.name_scope("word_embeddings"):

self.weight = self.add_weight(

name="weight",

shape=[self.config.vocab_size, self.hidden_size],

initializer=get_initializer(self.initializer_range),

)

with tf.name_scope("token_type_embeddings"):

self.token_type_embeddings = self.add_weight(

name="embeddings",

shape=[self.config.type_vocab_size, self.hidden_size],

initializer=get_initializer(self.initializer_range),

)

with tf.name_scope("position_embeddings"):

self.position_embeddings = self.add_weight(

name="embeddings",

shape=[self.max_position_embeddings, self.hidden_size],

initializer=get_initializer(self.initializer_range),

)

super().build(input_shape)

def call(

self,

input_ids: tf.Tensor = None,

position_ids: tf.Tensor = None,

token_type_ids: tf.Tensor = None,

inputs_embeds: tf.Tensor = None,

past_key_values_length=0,

training: bool = False,

) -> tf.Tensor:

if input_ids is None and inputs_embeds is None:

raise ValueError("Need to provide either `input_ids` or `input_embeds`.")

input_shape = shape_list(inputs_embeds)[:-1]

if token_type_ids is None:

token_type_ids = tf.fill(dims=input_shape, value=0)

if position_ids is None:

position_ids = tf.expand_dims(

tf.range(start=past_key_values_length, limit=input_shape[1] + past_key_values_length), axis=0

)

position_embeds = tf.gather(params=self.position_embeddings, indices=position_ids)

token_type_embeds = tf.gather(params=self.token_type_embeddings, indices=token_type_ids)

final_embeddings = inputs_embeds + position_embeds + token_type_embeds

final_embeddings = self.LayerNorm(inputs=final_embeddings)

final_embeddings = self.dropout(inputs=final_embeddings, training=training)

return final_embeddings

最终输出的embeddings为三个embeddings相加,同时加上了LayerNorm和dropout,这段代码的模型结构有两个知识点:

LayerNorm:LayerNorm的作用是对每个样本embedding的每个维度进行归一化,使得每个特征的均值为0,方差为1

dropout:dropout的作用是在训练的时候随机的将一些神经元置为0,使得模型不会过拟合,dropout在training和inference时的行为是不一样的,training时会随机的将一些神经元置为0,inference时不会做任何操作,这里的training参数就是用来控制这个行为的

position_ids和token_type_ids在模型中起到什么作用了?position_ids是用来表示每个token的位置的,token_type_ids是用来表示每个token的类型的,其中position_ids在Attention is All you need的原始论文中引入,主要解决自注意力机制不能够区分位置信息的问题,token_type_ids是Bert中引入的,主要解决Bert中的句子对任务的问题,用来区别是第一个句子还是第二个句子。 以bert-base-chinese为例,包含的vocab字典大小为21128,token_type为2,max_position为512,embedding的长度为768,Embedding层的参数量为(21128+2+512)*768=16267776,这里的参数量是指Embedding层的参数量,不包含LayerNorm和dropout的参数量,LayerNorm的参数量为768*2=1536,dropout的参数量为0,所以Embedding层的总参数量为16269312 ####Bert中Embeddings的input_ids,position_ids,token_type_ids怎么生成的 ####WordPiece tokenization算法 WordPiece tokenization算法是一种基于字典的分词算法,是Google为Bert开发的一种分词方法,后来的DistilBert,MobileBert等模型也是基于WordPiece的分词方法。它的基本思想是将一个词分成多个字,然后根据字典来进行分词,这里的字典是指WordPiece的字典,WordPiece的字典是通过训练语料来得到的,具体的算法可以参见Huggingface中WordPiece tokenization的教程,这里做个简单的介绍: 1、把word按照字母切分,加上前缀(bert是##的前缀),如 word ==> w ##o ##r ##d 2、合并阶段,合并的时候,不同于BPE,合并准则为:score=(freq_of_pair)/(freq_of_first_element×freq_of_second_element),按照分数高低合并,直到达到设定的词典大小 利用字典分词的时候,利用最大匹配的方式去分词,如果不在词典中,则返回’[UNK]’特殊字符,具体的代码如下:

def encode_word(word):

tokens = []

while len(word) > 0:

i = len(word)

while i > 0 and word[:i] not in vocab:

i -= 1

if i == 0:

return ["[UNK]"]

tokens.append(word[:i])

word = word[i:]

if len(word) > 0:

word = f"##{word}"

return tokens

假设vocab为:[‘[PAD]’, ‘[UNK]’, ‘[CLS]’, ‘[SEP]’, ‘[MASK]’, ‘##a’, ‘##b’, ‘##c’, ‘##d’, ‘##e’, ‘##f’, ‘##g’, ‘##h’, ‘##i’, ‘##k’,’##l’, ‘##m’, ‘##n’,’##o’, ‘##p’, ‘##r’, ‘##s’, ‘##t’, ‘##u’, ‘##v’, ‘##w’, ‘##y’, ‘##z’, ‘,’, ‘.’, ‘C’, ‘F’, ‘H’, ‘T’, ‘a’, ‘b’, ‘c’, ‘g’, ‘h’, ‘i’, ‘s’, ‘t’, ‘u’, ‘w’, ‘y’, ‘ab’, ‘##fu’, ‘Fa’, ‘Fac’, ‘##ct’, ‘##ful’, ‘##full’, ‘##fully’, ‘Th’, ‘ch’, ‘##hm’, ‘cha’, ‘chap’, ‘chapt’, ‘##thm’, ‘Hu’, ‘Hug’, ‘Hugg’, ‘sh’, ‘th’, ‘is’, ‘##thms’,’##za’, ‘##zat’, ‘##ut’] 那么encode_word(“Hugging”) => [‘Hugg’, ‘##i’, ‘##n’, ‘##g’];encode_word(“HOgging”) => [‘[UNK]’] embedding的生成如下图所示

当前汉语Bert预训练模型一般直接用的是汉字,在利用WordPiece的时候,会事先在每个汉字周边都插入空格,对每个字分成一个词,因此最终的效果等价于每个汉字会是一个词。 ####利用Transformer中的Tokenizer实现对中文的分词处理 AutoTokenizer是Huggingface Transformer中的一个类,可以根据模型的名称自动的选择对应的Tokenizer,这里以bert-base-chinese为例,代码如下: tokenizer = AutoTokenizer.from_pretrained(‘model_dir/bert_base_chinese’) 这里我们假设下载好的模型存在model_dir/bert_base_chinese的文件夹中,AutoTokenizer会自动的选择BertTokenizer,然后加载对应的词典,利用相应的词典就可以对语料进行编码。 为了用实际的数据集例子,我们介绍一下golden-horse数据集,这个数据集是一个中文命名实体识别的数据集,可以在github搜索下载。数据集的处理代码如下:

import pandas as pd

train_file = 'data/weiboNER_2nd_conll.train'

dev_file = 'data/weiboNER_2nd_conll.dev'

test_file = 'data/weiboNER_2nd_conll.test'

sentence_id = 0

def encode(s):

global sentence_id

if pd.isna(s):

sentence_id += 1

return None

else:

return str(sentence_id)

def get_dataset(filename, output_label=False):

data = pd.read_csv(filename, sep='\t', header=None, names=['token', 'POS'], skip_blank_lines=False,encoding='utf-8')

data['word'] = data['token'].fillna('').map(lambda i: i[:-1])

data['sentence'] = data['token'].apply(encode)

data.dropna(how="any", inplace=True)

if output_label:

label2id = {k: v for v, k in enumerate(data.POS.unique())}

id2label = {v: k for v, k in enumerate(data.POS.unique())}

# let's create a new column called "sentence" which groups the words by sentence

data['Sentence'] = data[['sentence','word','POS']].groupby(['sentence'])['word'].transform(lambda x: ''.join(x))

# let's also create a new column called "word_labels" which groups the tags by sentence

data['word_labels'] = data[['sentence','word','POS']].groupby(['sentence'])['POS'].transform(lambda x: ','.join(x))

data = data[["Sentence", "word_labels"]].drop_duplicates().reset_index(drop=True)

if output_label:

return data, label2id, id2label

else:

return data

train_dataset, label2id, id2label = get_dataset(train_file, True)

test_dataset = get_dataset(test_file)

dev_dataset = get_dataset(dev_file)

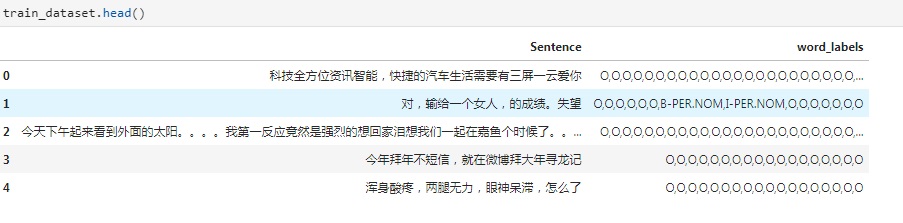

处理后的数据格式如下:

为了得到编码后的token_id,我们只需要一句代码就可以实现:

为了得到编码后的token_id,我们只需要一句代码就可以实现:

encodings = tokenizer(sentence,padding=True, truncation=True,max_length=30, return_attention_mask=True,return_offsets_mapping=True)

上述代码确定了最大长度为30,如果句子长度不足30,则会自动补充[PAD],如果句子长度超过30,则会自动截断(实际上会自动上述代码会自动在每个句子插入一个特殊的开始字符[CLS]和结束字符[SEP],因此最大的句子长度为28)。返回的encodings是一个字典,包含了input_ids, attention_mask, token_type_ids, offset_mapping四个参数,其中input_ids就是我们需要的编码后的token_id。attention_mask的作用是告诉模型哪些是真实的输入,哪些是[PAD],这样模型就不会把[PAD]的部分也当做输入进行计算。

offset_mapping是一个数组,数组的元素一个二元的元组。这个元组的两个元素分别表示token_id的起始位置和结束位置,主要用来对应原始的词以及其标签和编码后的token_id,因为可能一个单词在编码后会对应多个token_id,这个时候,之前的标签就和token_id不能一一对应,需要我们利用offset_mapping去修复。具体的修复代码如下:

```python

import numpy as np

def encode_tags(tags, encodings):

labels = [[label2id[tag] for tag in doc.split(',')] for doc in tags]

encoded_labels = []

for doc_labels, doc_offset in zip(labels, encodings.offset_mapping):

doc_enc_labels = np.ones(len(doc_offset),dtype=int) * -100 # label -100模型不会训练

for i, (beg, end) in enumerate(doc_offset):

if end != 0:

doc_enc_labels[i] = doc_labels[beg]

encoded_labels.append(doc_enc_labels.tolist())

return encoded_labels

encoded_labels = encode_tags(labels, encodings)

###注意力网络 注意力结构有各种各种,应用比较广泛的是Transformer论文中提出的基于缩放的内积注意力机制,其公式如下: \(attn(Q,K,V) = softmax(\frac{QK^T}{\sqrt{d_k}})V\) 对于每一个向量$q_{i}, k_{j}$: \(a_{ij} = softmax(\frac{q_{i}k_{j}^T}{\sqrt{d_k}}) = \frac{exp(q_{i}k_{j}^T)}{\sqrt{d_k}\sum_{j=1}^{n}exp(q_{i}k_{j}^T)}\) 对每一个i,计算j的注意力权重$a_{ij}$,然后对相应的$v_{j}$进行加权求和,得到$q_{i}$所对应的向量输出 ###多头注意力网络 多头注意力结构是Transformer中的关键结构,相对于注意力结构来讲,多头注意力网络把输入矩阵映射到多个小的子空间中去,在各个子空间中计算注意力,多个注意力网络的输出直接拼接起来,并通过线性映射到期望的维度上,其公式如下: \(MultiHead(Q,K,V) = Concat(head_{1},...,head_{h})W^{O}\) \(head_{i}=Attention(QW_{i}^{Q},KW_{i}^{K},VW_{i}^{V})\) 这里的$W_{i}^{Q},W_{i}^{K},W_{i}^{V}$是线性映射的权重矩阵,$h$是头的个数,$W_{i}^{Q},W_{i}^{K} \in R^{d\times d_k/h},W_{i}^{V} \in R^{d\times d_v/h}$是权重矩阵,将输入的embedings从$L\times {d}$的矩阵分别转换为query、key和value矩阵。$W_{O}\in R^{d_v \times d}$是最后的线性映射权重矩阵,$d_{k}$是每个头的维度,$d_{v}$是输出的维度,$d_{k}=d_{v}=d_{model}$,$d_{model}$是注意力网络输入的维度。多头注意力网络看起来比注意力机制要复杂不少,需要多个矩阵乘法,但是实际上,多头注意力网络的计算量和注意力机制是一样的,实现的时候可以用一个矩阵乘法来实现。 ###Transformers中Bert的attention代码 下面我们来具体看看Bert中attention是怎么实现的,以便能够更好的理解attention结构,为了方便理解,我删除了一些代码,只保留了关键的部分,Bert编码器结构和原始的Transformer结构一致,大家可以对照着原始的attention结构图来理解下面的代码

class TFBertSelfAttention(tf.keras.layers.Layer):

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

#这里的hidden_size就是上文说的d_model,num_attention_heads就是h

if config.hidden_size % config.num_attention_heads != 0:

raise ValueError(

f"The hidden size ({config.hidden_size}) is not a multiple of the number "

f"of attention heads ({config.num_attention_heads})"

)

self.num_attention_heads = config.num_attention_heads

self.attention_head_size = int(config.hidden_size / config.num_attention_heads)

self.all_head_size = self.num_attention_heads * self.attention_head_size

self.sqrt_att_head_size = math.sqrt(self.attention_head_size)

self.query = tf.keras.layers.Dense(

units=self.all_head_size, kernel_initializer=get_initializer(config.initializer_range), name="query"

)

self.key = tf.keras.layers.Dense(

units=self.all_head_size, kernel_initializer=get_initializer(config.initializer_range), name="key"

)

self.value = tf.keras.layers.Dense(

units=self.all_head_size, kernel_initializer=get_initializer(config.initializer_range), name="value"

)

self.dropout = tf.keras.layers.Dropout(rate=config.attention_probs_dropout_prob)

def transpose_for_scores(self, tensor: tf.Tensor, batch_size: int) -> tf.Tensor:

# Reshape from [batch_size, seq_length, all_head_size] to [batch_size, seq_length, num_attention_heads, attention_head_size]

tensor = tf.reshape(tensor=tensor, shape=(batch_size, -1, self.num_attention_heads, self.attention_head_size))

# Transpose the tensor from [batch_size, seq_length, num_attention_heads, attention_head_size] to [batch_size, num_attention_heads, seq_length, attention_head_size]

return tf.transpose(tensor, perm=[0, 2, 1, 3])

def call(

self,

hidden_states: tf.Tensor,

attention_mask: tf.Tensor,

head_mask: tf.Tensor,

encoder_hidden_states: tf.Tensor,

encoder_attention_mask: tf.Tensor,

output_attentions: bool,

training: bool = False,

) -> Tuple[tf.Tensor]:

batch_size = shape_list(hidden_states)[0]

mixed_query_layer = self.query(inputs=hidden_states)

key_layer = self.transpose_for_scores(self.key(inputs=hidden_states), batch_size)

value_layer = self.transpose_for_scores(self.value(inputs=hidden_states), batch_size)

query_layer = self.transpose_for_scores(mixed_query_layer, batch_size)

# 计算"query"和"key" 的内积获取原始的attention分数

# (batch size, num_heads, seq_len_q, seq_len_k)

attention_scores = tf.matmul(query_layer, key_layer, transpose_b=True)

dk = tf.cast(self.sqrt_att_head_size, dtype=attention_scores.dtype)

attention_scores = tf.divide(attention_scores, dk)

if attention_mask is not None:

# Apply the attention mask is (precomputed for all layers in TFBertModel call() function)

attention_scores = tf.add(attention_scores, attention_mask)

# 将attention分数归一化获取概率值

attention_probs = stable_softmax(logits=attention_scores, axis=-1)

attention_probs = self.dropout(inputs=attention_probs, training=training)

if head_mask is not None:

attention_probs = tf.multiply(attention_probs, head_mask)

attention_output = tf.matmul(attention_probs, value_layer)

attention_output = tf.transpose(attention_output, perm=[0, 2, 1, 3])

# (batch_size, seq_len_q, all_head_size)

attention_output = tf.reshape(tensor=attention_output, shape=(batch_size, -1, self.all_head_size))

outputs = (attention_output, attention_probs) if output_attentions else (attention_output,)

return outputs

class TFBertSelfOutput(tf.keras.layers.Layer):

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

self.dense = tf.keras.layers.Dense(

units=config.hidden_size, kernel_initializer=get_initializer(config.initializer_range), name="dense"

)

self.LayerNorm = tf.keras.layers.LayerNormalization(epsilon=config.layer_norm_eps, name="LayerNorm")

self.dropout = tf.keras.layers.Dropout(rate=config.hidden_dropout_prob)

def call(self, hidden_states: tf.Tensor, input_tensor: tf.Tensor, training: bool = False) -> tf.Tensor:

hidden_states = self.dense(inputs=hidden_states)

hidden_states = self.dropout(inputs=hidden_states, training=training)

hidden_states = self.LayerNorm(inputs=hidden_states + input_tensor)

return hidden_state

class TFBertAttention(tf.keras.layers.Layer):

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

self.self_attention = TFBertSelfAttention(config, name="self")

self.dense_output = TFBertSelfOutput(config, name="output")

def call(

self,

input_tensor: tf.Tensor,

attention_mask: tf.Tensor,

head_mask: tf.Tensor,

encoder_hidden_states: tf.Tensor,

encoder_attention_mask: tf.Tensor,

output_attentions: bool,

training: bool = False,

) -> Tuple[tf.Tensor]:

self_outputs = self.self_attention(

hidden_states=input_tensor,

attention_mask=attention_mask,

head_mask=head_mask,

encoder_hidden_states=encoder_hidden_states,

encoder_attention_mask=encoder_attention_mask,

output_attentions=output_attentions,

training=training,

)

attention_output = self.dense_output(

hidden_states=self_outputs[0], input_tensor=input_tensor, training=training

)

# 输出attention分数

outputs = (attention_output,) + self_outputs[1:]

return outputs

class TFBertIntermediate(tf.keras.layers.Layer):

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

self.dense = tf.keras.layers.Dense(

units=config.intermediate_size, kernel_initializer=get_initializer(config.initializer_range), name="dense"

)

if isinstance(config.hidden_act, str):

self.intermediate_act_fn = get_tf_activation(config.hidden_act)

else:

self.intermediate_act_fn = config.hidden_act

def call(self, hidden_states: tf.Tensor) -> tf.Tensor:

hidden_states = self.dense(inputs=hidden_states)

hidden_states = self.intermediate_act_fn(hidden_states)

return hidden_states

class TFBertOutput(tf.keras.layers.Layer):

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

self.dense = tf.keras.layers.Dense(

units=config.hidden_size, kernel_initializer=get_initializer(config.initializer_range), name="dense"

)

self.LayerNorm = tf.keras.layers.LayerNormalization(epsilon=config.layer_norm_eps, name="LayerNorm")

self.dropout = tf.keras.layers.Dropout(rate=config.hidden_dropout_prob)

def call(self, hidden_states: tf.Tensor, input_tensor: tf.Tensor, training: bool = False) -> tf.Tensor:

hidden_states = self.dense(inputs=hidden_states)

hidden_states = self.dropout(inputs=hidden_states, training=training)

hidden_states = self.LayerNorm(inputs=hidden_states + input_tensor)

return hidden_states

class TFBertLayer(tf.keras.layers.Layer):

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

self.attention = TFBertAttention(config, name="attention")

self.intermediate = TFBertIntermediate(config, name="intermediate")

self.bert_output = TFBertOutput(config, name="output")

def call(

self,

hidden_states: tf.Tensor,

attention_mask: tf.Tensor,

head_mask: tf.Tensor,

encoder_hidden_states: Optional[tf.Tensor],

encoder_attention_mask: Optional[tf.Tensor],

output_attentions: bool,

training: bool = False,

) -> Tuple[tf.Tensor]:

self_attention_outputs = self.attention(

input_tensor=hidden_states,

attention_mask=attention_mask,

head_mask=head_mask,

encoder_hidden_states=None,

encoder_attention_mask=None,

output_attentions=output_attentions,

training=training,

)

attention_output = self_attention_outputs[0]

outputs = self_attention_outputs[1:] # 如果选择输出attention分数的话,把分数也输出

intermediate_output = self.intermediate(hidden_states=attention_output)

layer_output = self.bert_output(

hidden_states=intermediate_output, input_tensor=attention_output, training=training

)

outputs = (layer_output,) + outputs

return outputs

class TFBertEncoder(tf.keras.layers.Layer):

def __init__(self, config: BertConfig, **kwargs):

super().__init__(**kwargs)

self.config = config

self.layer = [TFBertLayer(config, name=f"layer_._{i}") for i in range(config.num_hidden_layers)]

def call(

self,

hidden_states: tf.Tensor,

attention_mask: tf.Tensor,

head_mask: tf.Tensor,

encoder_hidden_states: Optional[tf.Tensor],

encoder_attention_mask: Optional[tf.Tensor],

output_attentions: bool,

output_hidden_states: bool,

return_dict: bool,

training: bool = False,

) -> Union[TFBaseModelOutputWithPastAndCrossAttentions, Tuple[tf.Tensor]]:

all_hidden_states = () if output_hidden_states else None

all_attentions = () if output_attentions else None

for i, layer_module in enumerate(self.layer):

if output_hidden_states:

all_hidden_states = all_hidden_states + (hidden_states,)

layer_outputs = layer_module(

hidden_states=hidden_states,

attention_mask=attention_mask,

head_mask=head_mask[i],

encoder_hidden_states=encoder_hidden_states,

encoder_attention_mask=encoder_attention_mask,

output_attentions=output_attentions,

training=training,

)

hidden_states = layer_outputs[0]

if output_attentions:

all_attentions = all_attentions + (layer_outputs[1],)

# 加上最后一层的hidden_states

if output_hidden_states:

all_hidden_states = all_hidden_states + (hidden_states,)

if not return_dict:

return tuple(

v for v in [hidden_states, all_hidden_states, all_attentions] if v is not None

)

return TFBaseModelOutputWithPastAndCrossAttentions(

last_hidden_state=hidden_states,

past_key_values=next_decoder_cache,

hidden_states=all_hidden_states,

attentions=all_attentions

)

看完BertLayer的结构我们就能整体的理解Bert模型的参数配置了。在Transformers的开源模型中,模型结构配置在config.json,比如bert_base模型的配置如下所示: { “architectures”: [ “BertForMaskedLM” ], “attention_probs_dropout_prob”: 0.1, “gradient_checkpointing”: false, “hidden_act”: “gelu”, “hidden_dropout_prob”: 0.1, “hidden_size”: 768, “initializer_range”: 0.02, “intermediate_size”: 3072, “layer_norm_eps”: 1e-12, “max_position_embeddings”: 512, “model_type”: “bert”, “num_attention_heads”: 12, “num_hidden_layers”: 12, “pad_token_id”: 0, “position_embedding_type”: “absolute”, “transformers_version”: “4.6.0.dev0”, “type_vocab_size”: 2, “use_cache”: true, “vocab_size”: 30522 } 从上面的配置中我们可以看到,我们可以计算出bert base的参数量构成:

- 词嵌入层: vocab_size (30,522) * hidden_size (768) = 23,440,896

- 位置嵌入层: max_position_embeddings (512) * hidden_size (768) = 393,216

- 词类型嵌入层: type_vocab_size (2) * hidden_size (768) = 1,536

之后,我们需要计算每个Transformer层的参数量,然后将其乘以层数(12层)。 在每个Transformer层中,参数量来自于以下组件:

自注意力层:

- Q矩阵: hidden_size (768) * hidden_size (768) = 589,824

- K矩阵: hidden_size (768) * hidden_size (768) = 589,824

- V矩阵: hidden_size (768) * hidden_size (768) = 589,824

- 输出: hidden_size (768) * hidden_size (768) = 589,824

- 层归一化: 2 * hidden_size (768) = 1,536 * 2 = 3,072

前馈神经网络(FFN):

- 第一层: hidden_size (768) * intermediate_size (3,072) = 2,359,296

- 第二层: intermediate_size (3,072) * hidden_size (768) = 2,359,296

- 层归一化 (FFN后面的):2* hidden_size (768) =1,536 * 2 = 3,072

然后我们将参数相加:每个Transformer层的参数量: 2,359,296 (自注意力层) + 3,072 (层归一化) + 4,718,592 (前馈神经网络) + 3,072 (层归一化)= 7,084,032。总参数量: 23,440,896 (词嵌入) + 393,216 (位置嵌入) + 1,536 (类型嵌入) + (7,084,032 * num_hidden_layers) = 23,440,896 + 393,216 + 1,536 + 84,648,384 = 108,483,032,计算出来bert base总参数量大约为110M。这里计算出的参数量略低于实际值,这是因为我们没有考虑一些额外的参数,比如最后的分类层。 ####Bert预训练任务